Innovations that will change our lives

The technology company IBM announces five innovations that will influence our lives by 2022. They are based on advances in artificial intelligence and nanotechnology.

"5 in 5" is IBM's name for a list of scientific innovations with the potential to change our lives in the next five years. The list is based on the results of market analyses, social trends and projects from IBM research centers around the globe.

Making the invisible visible

In 1609 Galileo built his telescope and suddenly saw our cosmos with different eyes. He thus provided evidence for what had previously been unprovable - that the earth and the other planets in our system orbit the sun. IBM Research aims to follow suit with novel software and instruments that make the invisible in our world visible, from the macro to the nano level. "The scientific community has always developed instruments that help us see the world through entirely new eyes. For example, the microscope makes tiny things visible to us, and the thermometer helps us measure temperatures," said Dario Gil, Vice President of Science & Solutions at IBM Research. "Now, based on advances in artificial intelligence and nanotechnology, we want to develop, over the next five years, a new generation of instruments that will help us better understand the complex, invisible relationships in our world today."

A global team of IBM scientists is constantly working to make such inventions from the research centers fit for everyday use. The following five scientific innovations will make the invisible visible over the next five years.

Artificial intelligence gives insight into our mental health

One in five adults in the US today suffers from a neurological or mental condition such as Huntington's disease, Alzheimer's, Parkinson's, depression or psychosis - but only about half of those affected receive treatment. Globally, the cost of treating such conditions exceeds that of diabetes, respiratory disease and cancer: in the US alone, the annual cost is more than $1 trillion.

Many processes in the brain are still a mystery despite the successes in research. One key to a better understanding of these complex interrelationships is language. In the next five years, cognitive systems will be able to draw important conclusions about our mental state and physical condition from the way we speak and write. In one project, for example, IBM experts are combining transcripts and audio recordings of patient conversations with machine learning to uncover speech patterns that will help accurately predict psychosis, schizophrenia, manic behavior or depression in the future. Currently, the cognitive system that processes this data needs only 300 words to make a prediction.

The researchers hope that in the future similar techniques can also be applied to the conditions mentioned above, as well as to post-traumatic stress disorder and even autism and attention deficit disorders. To do this, cognitive systems analyze the speech, statements, syntax and intonation of those affected. Combined with wearable devices and imaging techniques such as electroencephalography (EEG), a method of measuring the brain's electrical activity by recording voltage fluctuations on the surface of the head, a comprehensive picture of the person emerges, aiding psychologists and medical professionals in diagnosis and future treatment. Signs that used to be invisible will thus become discernible indicators of whether the onset or deterioration of a patient's disease is imminent, and whether a treatment is working or needs to be adjusted. If mobile devices are also used, patients or their relatives will be able to carry out the relevant examinations themselves at home and thus help prepare for doctor's appointments.

Novel vision aids combined with artificial intelligence expand visual capabilities

More than 99.9 % of the electromagnetic spectrum is invisible to the human eye. Over the last 100 years, however, science has developed devices that use rays and their energy at different wavelengths to make things visible - examples include radar and X-ray imaging. Although these devices have often been in use for decades, they can still only be operated by specialists and are expensive to purchase and maintain. In five years, new visual aids combined with artificial intelligence will allow us to see broader bands of the electromagnetic spectrum and gain valuable insights from them. An important feature of these innovations: the aids will be portable, affordable and available everywhere.

One application scenario that is currently being much discussed and tested is self-driving cars. With the help of cognitive systems, sudden obstacles or deteriorating weather conditions can be analyzed better and faster than is possible today in order to navigate the vehicle safely to its destination. Taking this one step further, what if technologies of this kind were built into our smartphones in the future and could help us determine the nutritional content of a food item or its shelf life? Or help verify the authenticity of a drug? IBM scientists are already working on a compact technology platform of this kind that will significantly expand our visual capabilities.

Using macroscopy to better understand global interrelationships

The interconnections and complexity of our immediate environment remain hidden from us in the vast majority of cases. With the Internet of Things and its already more than six billion connected devices, this will change permanently: Refrigerators and light bulbs, drones, cameras, weather stations, satellites or telescopes already provide exabytes of additional, previously little-used data every month. After the digitization of information, transactions and social interactions, it is now time to digitize the processes of the physical world. Over the next five years, machine learning algorithms and software will help organize and understand this information from the physical world. This approach is called macroscopy. Unlike a microscope or a telescope, systems being developed for this approach are designed to analyze interactions of things that are visible to the naked eye but cannot be easily put into context.

Take agriculture, for example: By collecting, organizing and analyzing data on climate, soil conditions, groundwater levels and cultivation methods, farmers will be able to select their seeds, determine the right location for fields and optimize yields - without unnecessarily depleting precious groundwater reserves. In 2012, IBM Research began a project with the U.S. winery Gallo that evaluated irrigation methods, soil conditions, weather data from satellites and other details to ensure the best irrigation for optimal yield and quality on its soils. In the future, such macroscopic approaches will be used everywhere - for example, in astronomy to evaluate data on asteroids, to determine their material compositions more precisely, and to predict collision courses.

Chips become medical laboratories and find triggers for diseases on the nanoscale

Early detection of diseases is crucial for their treatment. However, some diseases, such as the above-mentioned Parkinson's disease or cancer, are difficult to diagnose at an early stage. One possibility for early detection lies in bioparticles found in body fluids such as saliva, tears, blood, urine or sweat. However, since these particles are often 1,000 times smaller than the diameter of a human hair, they are extremely difficult to detect.

In the next five years, chips will become tiny medical laboratories that scan our bodily fluids and let us know early enough when a doctor's appointment is needed. The aim of the research is to bundle the necessary examinations, which previously required a fully equipped laboratory environment, onto a single chip. In the future, such a chip will enable users to read out biomarkers quickly and regularly and to transfer this information to the cloud from the comfort of their own homes. There, it can be linked with further data from, for example, sleep monitors or smart watches and analyzed by a cognitive system. The combination of different data sets provides a comprehensive insight into the state of health and can identify problematic indicators at an early stage.

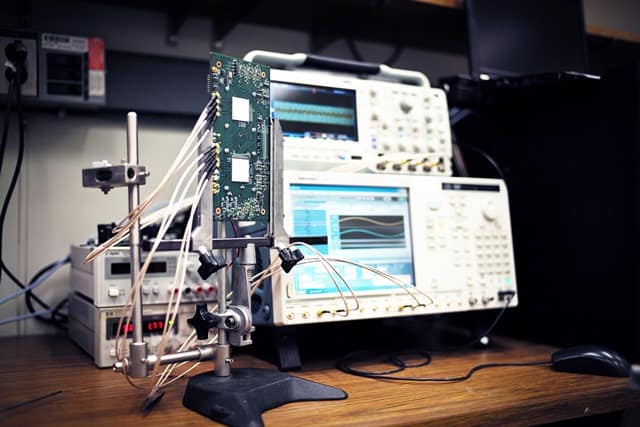

Scientists at IBM Research are already working on a "lab-on-a-chip" nanotechnology that can separate and isolate bioparticles with a diameter of just 20 nanometers, a scale that covers DNA, viruses and exosomes.

Intelligent sensors detect pollution in real time

Most pollutants are invisible to the human eye - until their effects can no longer be ignored. Methane, for example, is a component of natural gas, which is commonly regarded as a clean energy source. However, when methane escapes into the air before it is burned, it is a major contributor to global warming, along with carbon dioxide. The U.S. Environmental Protection Agency (EPA) estimates that more than nine million tons of methane leaked from natural gas systems alone in 2014. Measured as carbon dioxide equivalent over 100 years, this is more greenhouse gas than was emitted by the American iron and steel, cement and aluminum industries combined.

In five years, new, low-cost sensor technologies will be available to attach to gas wells, tanks and pipelines, enabling the industry to detect previously hard-to-find leaks in real time. Networks of Internet of Things sensors will be interconnected in the cloud, monitoring widely dispersed wells and production infrastructure to detect a leak within minutes - rather than weeks. In doing so, they will help reduce leakage and the likelihood of disasters.

IBM researchers are already working with gas companies such as Southwestern Energy of Texas, as part of the ARPA-E Methane Observation Networks with Innovative Technology to Obtain Reductions (MONITOR) program, to develop an intelligent methane monitoring system of this kind. The researchers are using silicon photonics - a technology in which data is transmitted between computer chips by light. The advantage: light can transmit far more data in less time than electrical conductors. These chips can be integrated into network sensors directly on site, at other points in the monitoring chain or even in drones. In this way, a complex environmental model can be developed from real-time data that determines the origin and quantity of pollutants at the moment they occur.

More information about IBM 5 in 5: http://ibm.biz/five-in-five